In a 2003 speculation (‘Are You Living in a Computer Simulation?’, Philosophical Quarterly,

In a sense, if the universe is simulated, then we and all other life forms within it are “avatars”.

A first start is the computing code for a von Neumann machine. This may comprise some N formal statements that specify the behavioral and other limits for the universe simulator. The key question is whether these N coded statements, comprising the entire matrix of system information, can successfully be replicated at lower hierarchical levels.

For the sake of argument, let $10^{10}$ bits be associated with each statement in a von Neumann code (program). Let each machine process the same entropy $E = N \times 10^{10}$ bits/second. Here is the problem: all the statements in the code concern the behavior per se: what the von Neumann machine is to do. They include nothing about replicating itself! This replicative statement therefore exists outside the code: it is a meta-statement! One requires at minimum an entropy:

$$E' = (N+1) \times 10^{10} \ \text{bits/second}$$

We can see that E' > E, so the message cannot be transferred! The entropy exceeds the bounds of the information channel capacity. Phrased another way, the statement for replication arises from outside the system of code, not from within its instructions.
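The arithmetic behind E and E' can be checked with a few lines of Python. This is only a sketch of the argument as stated above: the statement count `N = 1000` and the function names are made up for illustration, and the channel capacity is assumed to equal E.

```python
BITS_PER_STATEMENT = 10**10  # the post's assumed size of each coded statement


def entropy_rate(num_statements, bits_per_statement=BITS_PER_STATEMENT):
    """Bits per second needed to carry `num_statements` statements."""
    return num_statements * bits_per_statement


def can_transfer(required, capacity):
    """A message fits the channel only if its rate does not exceed capacity."""
    return required <= capacity


N = 1000                       # hypothetical statement count, illustration only
E = entropy_rate(N)            # what each machine is assumed to process
E_prime = entropy_rate(N + 1)  # behavior code plus the replication meta-statement

print(E_prime - E)               # 10000000000
print(can_transfer(E_prime, E))  # False
```

Whatever value of N is chosen, the shortfall is always one statement's worth of bits, which is the whole point of the argument.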

In 1982, the Nobel-winning physicist Richard Feynman made a notable (and as it turns out, prescient) observation concerning a primitive “universal quantum simulator”.

Feynman speculated that to simulate 300 nuclear spins, the quantum simulator would need only 300 quantum bits, or qubits. So long as one could program the interactions between qubits so that they emulated the interactions between the 300 nuclear spins, the dynamics would be simulated.

Was Feynman off his rocker? Not really! As it turns out, one of the best ways to generate simulations via a quantum computer is to use nuclear spins. To see how this could work, study the accompanying diagram with three versions of nuclear spin, each of which is assigned a wave state vector in bracket form:

In this case, the nuclear spin down state corresponds to $|1\rangle$, the spin up state to $|0\rangle$, and there is a combined state, $|0\rangle + |1\rangle$. The last is a state of spin along the axis perpendicular to the spin axis. More to the point, the latter superposition illustrates the property of quantum parallelism: the ability to compute or register two states simultaneously, which is impossible for normal computers (which register 0 OR 1, never both at one time, unless they are in glitch mode!). Thus, a quantum computer can handle both bit values of a qubit at one time. If there are a trillion such qubits, each can potentially register 1 and 0 in combined wave bracket states, simultaneously.
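To make the three spin states concrete, here is a minimal Python sketch that stores each state as a pair of amplitudes and applies the Born rule to get the chance of reading 0 or 1. The names (`SPIN_UP`, `PLUS`, and so on) are illustrative, and the combined state is normalized, a step the bracket notation above leaves implicit.

```python
import math

# A single-qubit state is a pair of amplitudes (a0, a1) for |0> and |1>.
SPIN_UP = (1.0, 0.0)    # |0>
SPIN_DOWN = (0.0, 1.0)  # |1>


def normalize(state):
    """Scale the amplitudes so the probabilities sum to 1."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in state))
    return tuple(a / norm for a in state)


def probabilities(state):
    """Born rule: the chance of reading 0 or 1 is |amplitude|^2."""
    return tuple(abs(a) ** 2 for a in state)


PLUS = normalize((1.0, 1.0))  # the combined state |0> + |1>

print(probabilities(SPIN_UP))  # (1.0, 0.0)
print(probabilities(PLUS))     # roughly one half each
```

A classical bit corresponds to one of the first two rows only; the third row has no classical counterpart.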

It should also be easily seen that this combined wave state is the analog of the superposition of states seen in the electron double slit experiment, e.g. for $\psi = \psi_1 + \psi_2$.

Any qubit in the state $|0\rangle$ can be placed in the combined state by rotating it one fourth of a turn (as evident from inspection of the diagram):

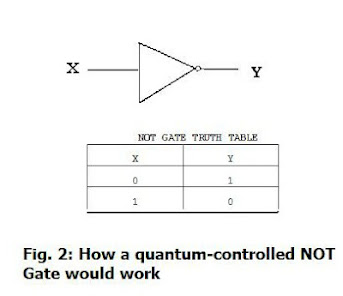
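The quarter-turn can be sketched numerically as well. The snippet below assumes the rotation is about the y-axis (the diagram may use a different axis) and uses the standard spin-1/2 rotation, in which a turn through an angle θ mixes the two amplitudes with cos(θ/2) and sin(θ/2). The function name `rotate_y` is illustrative.

```python
import math


def rotate_y(state, angle):
    """Rotate a single-qubit state (a0, a1) about the y-axis by `angle` radians."""
    c, s = math.cos(angle / 2), math.sin(angle / 2)
    a0, a1 = state
    return (c * a0 - s * a1, s * a0 + c * a1)


quarter_turn = math.pi / 2  # one fourth of a full 2*pi rotation

plus = rotate_y((1.0, 0.0), quarter_turn)  # start from |0>
print(plus)  # both amplitudes close to 0.7071, i.e. (|0> + |1>)/sqrt(2)

flipped = rotate_y(plus, quarter_turn)     # a second quarter turn
print(flipped)  # close to (0, 1), i.e. |1>
```

Two quarter turns in a row take spin up all the way to spin down, so the combined state sits halfway between the two.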

Much more fascinating (and to the point) is that if another qubit is introduced into the scene, say with the same wave state $|0\rangle$, its presence can “flip” the original qubit, effectively producing a quantum controlled-NOT operation, which acts like a classical NOT gate (see Fig. 2).
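A controlled-NOT is easy to sketch in Python. The version below uses the common textbook convention, in which the target qubit flips when the control reads 1; which spin label plays that role in the figure is a matter of labelling, so treat that choice as an assumption. The function names are illustrative.

```python
def cnot(control, target):
    """Flip the target bit only when the control bit is 1."""
    return control, target ^ control


def apply_cnot(state):
    """Apply CNOT to a two-qubit state given as {(control, target): amplitude}."""
    out = {}
    for (c, t), amp in state.items():
        key = cnot(c, t)
        out[key] = out.get(key, 0.0) + amp
    return out


# Truth table on the four basis states.
for c in (0, 1):
    for t in (0, 1):
        print((c, t), "->", cnot(c, t))

# Control in the combined state, target in |0>: the output pairs 00 with 11.
h = 2 ** -0.5
entangled = apply_cnot({(0, 0): h, (1, 0): h})
print(entangled)
```

With a definite control the gate behaves like a classical conditional NOT; with the control in the combined state, the two qubits come out correlated, which no classical gate can do.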

As readers will recall, it is billions and billions of such logic gates which form the basis of computing. Given that qubits also hold much more information than ordinary bits, it is easy to see that if such nuclear spins can be used in the sort of logic gate manner described, one can have the basis for a quantum computer.

If one can have such an entity, then one could simulate just about anything. To use the words of Seth Lloyd (‘Programming the Universe’, p. 154):

“A quantum computer that simulated the universe would have exactly as many qubits as there are in the universe, and the logic operations on those qubits would exactly simulate the dynamics of the universe.”

And further (ibid.):

“Because the behavior of elementary particles can be mapped directly onto the behavior of qubits acting via logic operations, a simulation of the universe on a quantum computer is indistinguishable from the universe itself.”

This is a profound statement! It implies that if the universe were indeed a mammoth simulation, we’d likely never be able to prove it or see outside of it. To us, locked inside as quantum simulations interacting dynamically with millions of other such simulations, it would all appear quite real.

But recently there have been some objections by contrarians who insist humans can still figure out if we’re trapped in a “matrix” or simulated universe.

Among the foremost proposals is to just look for serious “glitches” (or inconsistencies), say in the measurements of physical constants. The hypothesis on which this is based is essentially that it's only necessary to make an imperfect copy of the universe, which would require less computational power, to fool the inhabitants.

In this kind of makeshift imitation cosmos the fine details would only be filled in by the programmers when people study them using scientific equipment. As soon as no one is looking, they'd simply vanish. This would be based on the Copenhagen QM principle that no definite state of a system exists until an observation is made. Could we ever detect such disappearance events? Doubtful, given that each time the simulators noticed we were observing they'd sketch the details back in like an AI artist. This realization makes creating virtual cosmoses eerily possible.

But let's get back to detection using glitches, like those in a computer. In 2007, John Barrow at Cambridge University suggested that an imperfect copy of the universe would contain detectable glitches. Just like your laptop or iPad, it would need updates to keep working. In such a case, Barrow conjectured, one example would be to see constants of nature, like the speed of light or the fine structure constant, "drift" from their constant values. Such drift would be the giveaway.
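One simple way to look for such drift is to fit a straight line to repeated measurements of a constant and see whether the slope differs from zero. The Python sketch below does an ordinary least-squares fit; it is not Barrow's method, just an illustration, and the readings are made-up, unit-free numbers.

```python
def drift_slope(times, values):
    """Ordinary least-squares slope of `values` against `times`."""
    n = len(times)
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    num = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    den = sum((t - t_mean) ** 2 for t in times)
    return num / den


years = [0, 1, 2, 3]
steady = [1.0, 1.0, 1.0, 1.0]    # made-up readings of a truly constant value
drifting = [1.0, 1.1, 1.2, 1.3]  # made-up readings that creep upward

print(drift_slope(years, steady))    # 0.0
print(drift_slope(years, drifting))  # close to 0.1 per year
```

In practice the slope would have to be compared against the measurement uncertainty before anyone cried "glitch".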

Not long after, Silas Beane and colleagues at the University of Washington, Seattle, suggested a more concrete test of the hypothesis, in this case based on the notion of a lattice, which cosmologists actually use to build up their models of the early universe. In such a case, the motion of particles within the simulation (and hence their energy) would be related to the distances between points of the lattice. Thus, in a simulation we'd always observe a maximum energy for the fastest particles.
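The link between lattice spacing and a maximum energy can be put in numbers. On a cubic grid with spacing a, the largest momentum that fits is πħ/a, so for a very fast particle the energy tops out near πħc/a. That is the standard lattice result; whether it matches the exact relation used by Beane's team is an assumption here, and the spacing plugged in below is purely hypothetical.

```python
import math

HBAR_C_EV_M = 1.973269804e-7  # hbar*c in eV*metres (197.327 MeV*fm)


def max_energy_ev(lattice_spacing_m):
    """Energy cap for an ultra-relativistic particle: E_max ~ pi*hbar*c/a."""
    return math.pi * HBAR_C_EV_M / lattice_spacing_m


def spacing_for_energy_m(energy_ev):
    """Inverse relation: the lattice spacing whose cap sits at `energy_ev`."""
    return math.pi * HBAR_C_EV_M / energy_ev


a = 1e-27  # hypothetical lattice spacing in metres, illustration only
print(f"{max_energy_ev(a):.2e} eV")  # 6.20e+20 eV
```

The finer the grid, the higher the cap, so the most energetic particles ever observed set an upper limit on how coarse any such lattice could be.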

If space is continuous rather than granular, no underlying grid would guide the direction of cosmic rays, for example. The takeaway is that if we inhabit a real universe of physical origin, not a simulated one, then cosmic rays ought to come in randomly from all directions, with no preferred directions. On the other hand, if cosmic ray detectors find an uneven distribution (cosmic rays without random origins), it could indicate a simulated universe.
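A crude version of the "no preferred directions" check is a chi-square test of arrival counts against a uniform sky. The sketch below assumes the sky has been cut into equal-area patches with equal detector exposure, and the counts are invented for illustration; real analyses are far more involved.

```python
def chi_square_uniform(counts):
    """Pearson chi-square of observed counts against equal expected counts."""
    total = sum(counts)
    expected = total / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts)


isotropic = [100, 100, 100, 100]  # made-up counts, equal in every sky patch
clustered = [160, 80, 80, 80]     # made-up counts with one favoured patch

print(chi_square_uniform(isotropic))  # 0.0
print(chi_square_uniform(clustered))  # 48.0
```

With four patches there are three degrees of freedom, for which the usual 5% threshold is about 7.8, so the second set of counts would stand out as clearly lopsided.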

Ultimately, this cosmic ray test, above all others, may finally determine whether we are products of a biological (and cosmic) evolution beginning with the Big Bang or merely lines in an elaborate code forming an artificial multi-dimensional matrix. Personally, like Silas Beane, Nick Bostrom and others, I couldn't care less which version holds. The meat special pizza I order will still taste the same, and the real planet Earth will remain as fucked up as it's always been. As George Carlin once put it: "We're all spectators at a giant shit show just waiting to see what happens next."

That Carlin take seems ever more valid as we wait to see the results of the midterm elections!
