Frances Haugen testifying yesterday at a Senate hearing.

"Facebook's products harm children, stoke division and weaken our democracy." - Frances Haugen, yesterday at the Senate hearing

Whistleblower Frances Haugen literally blew lawmakers' socks off yesterday, documenting how Facebook had become a cauldron of hatred and polarization. Further, she has buttressed her claims with tens of thousands of pages of internal research documents she secretly copied before leaving her job in Facebook’s civic integrity unit. (Note: a FB talking head on the news last night disputed that Frances was part of this unit, while also insisting that Ms. Haugen "stole" the documents. No, she didn't. She copied them; the docs are still in Facebook's possession.)

Haugen has also filed complaints with federal authorities alleging that Facebook’s own research shows that it amplifies hate, misinformation and political unrest, but that the company hides what it knows. In her words yesterday:

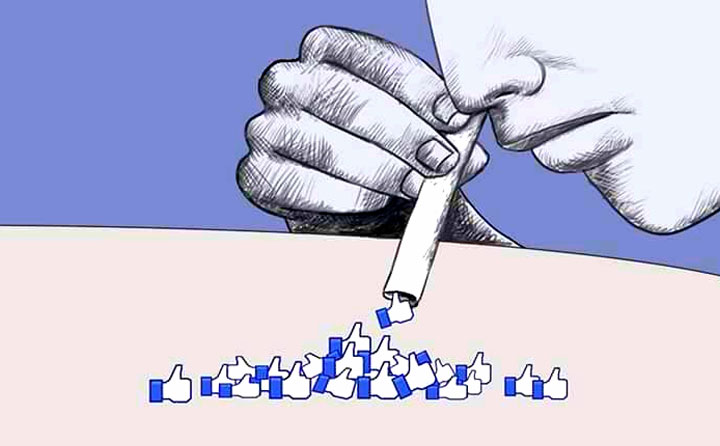

"Facebook knows that content that elicits an extreme reaction from you is more likely to get a click, a comment or reshare. They prioritize content in your feed, so you will give little hits of dopamine to your friends, and they will create more content."

After recent reports in The Wall Street Journal based on documents she leaked to the newspaper raised a public outcry, Haugen revealed her identity in a CBS “60 Minutes” interview aired Sunday night. She insisted that “Facebook, over and over again, has shown it chooses profit over safety.”

Who exactly is this ballsy former Facebook employee challenging the social network giant with 2.8 billion users worldwide and nearly $1 trillion in market value? She is a 37-year-old data expert from Iowa with a degree in computer engineering and a master’s degree in business from Harvard. Before Facebook recruited her in 2019, she worked for 15 years at companies including Google and Pinterest.

One internal study found that 13.5% of teen girls said Instagram makes thoughts of suicide worse and 17% of teen girls said it makes eating disorders worse. But all of this was predicted, years ago in fact, by computer scientist and data guru Tristan Harris.

It was Harris, over a year ago in an interview on Bill Maher's Real Time, who first pointed out the detrimental effects on teens to the HBO audience:

"It's destroyed the mental health of our teenagers. It's polarized our societies, addicted each of us. It has really warped the psyche we're in the middle of with this election. Much like a psychotic patient who has a mind fractured against itself, our national psyche is now fractured against itself."

How did this increase the polarization? He told Maher at the time:

"Facebook took the shared reality we have and put it through a paper shredder, and gave each of us a micro-reality in which we are more and more certain that we're right and the other side is wrong. And it's totally confused us. We're ten years into this process now."

He went on to explain that even a husband and wife on Facebook who have the same friends will nonetheless see completely different feeds, and therefore different realities. These feeds are shaped by algorithms that determine what is most likely to keep each of them addicted to FB and its "likes" structure, which encourages spreading rot. He added:

"Because of the competition for attention the companies starting to get really aggressive, about what they could dangle to get you to click, or come back."

Tristan Harris, appearing on All In last night, reprised his role as a techie prophet of social media virality, telling Chris Hayes how impressed he was with Ms. Haugen's testimony:

"What she said at the beginning of her hearing which is that this is something that everyone needs to know. And, you know, when she was looking at this inside information system in civic integrity at Facebook, she's seeing genocides, she's seeing misinformation, she's seeing body dysmorphia issues. And she's saying I shouldn't be the only one who knows this."

Most importantly, he emphasized how Facebook feeds the division. The company knows that anger-based engagement drives a public feedback loop of anger and makes political parties more divisive in the process. But it keeps feeding the anger beast because doing so maximizes engagement and profits. The cash cow is especially effective on the lowbrows who get their news exclusively from Facebook. Without comparative news from the original sources, their brains are being subtly shaped, and scrambled.

Harris then went on to explain to Chris Hayes how Facebook has amped up the hate and division with supercomputer-driven algorithms and processing:

"Obviously, the society was polarized before social media even came to us, and partisan television and the rest of it. What's different is when you have a supercomputer. So, Facebook is a trillion-dollar company. They have one of the largest supercomputers in the world. And when you scroll Facebook and you think you're just seeing what your friends are doing, every time you scroll, imagine you activate a supercomputer pointed at your brain that has seen two billion people interact with it today.

And it knows that if you are someone who clicked on an article saying, the vaccine is unsafe, it says, oh, well, you're just like these this other bucket of users. And this video over here that made fun of so many errors in the vaccine data or something like that, that worked really well for them.

So, we're micro-targeting the thing that will make people most cynical and most hateful of their fellow countrymen and women. And we've got a trillion-dollar supercomputer that every time people scroll puts at the center of attention the next fault line in society. It's like precision-guided weapons to find the next fault line in society."

Most enlightening to me was how people in Europe, for example, noticed when the algorithm changed to make people more hateful, when before they had been more agreeable. Harris gave the example of a political party, say in Poland, that used to be able to discuss agricultural policy and have people engage in a positive and benign way. Then, all of a sudden, traffic increased only when negative things were said about opponents. As Harris concluded, in total alignment with Haugen:

"That's why Facebook's business model is impenetrable with democracy, full stop. It's not just that it's showing us harmful -- we have a content problem. It's the morality. It's about the business model that treats, as we were talking earlier, the commodification of human attention which means it's that race to the bottom of the brain stem for our Paleolithic responses. And you're sorting for the reactivity of society."

The sad part is that too few Americans know the risks social media poses to themselves and their children, not to mention how the country is being splintered by tribalism.

See Also:

by Amanda Marcotte | October 5, 2021 - 7:52am | permalink
